AI is quickly making its way into enterprise technology stacks. Chatbots, internal search, document automation, and analytics are only a few of the ways language models are changing how businesses run. However, large language models (LLMs) do not always fit enterprise needs: they are expensive to run, raise data-privacy concerns, and demand complex infrastructure.

This is where Small Language Models (SLMs) come in. Designed to be lightweight, efficient, and task-focused, SLMs let enterprises adopt AI without the overhead of large models. This guide explains what Small Language Models are, how they work, their advantages and limitations, their practical applications, and why enterprises are turning to SLMs instead of relying solely on LLMs.

What Are Small Language Models (SLMs)?

Small Language Models (SLMs) are compact AI models trained on smaller, more focused datasets to perform specific language tasks well. Whereas large language models target a broad range of general-purpose problems, SLMs are built for domain-specific applications.

SLMs typically have fewer parameters, need fewer computational resources, and are simpler to deploy and maintain. This makes them especially useful in enterprise settings where predictable performance, cost control, and data confidentiality matter more than broad general intelligence.

Simply put, SLMs trade breadth for precision, which makes them well suited to targeted business use cases.

How Small Language Models Work

Small Language Models rely on the same building blocks as larger models, namely natural language processing (NLP) techniques, embeddings, and pattern recognition, but at a much smaller scale.

Key characteristics of how SLMs work:

- They are trained on curated and domain-specific datasets

- They specialize in a particular task, such as classification, summarization, intent detection, or retrieval

- They can be fine-tuned quickly on enterprise data

- They can run and scale on modest hardware or standard cloud platforms

Because they have fewer parameters, SLMs have shorter inference times and use far less energy than LLMs, which makes them practical for real-time enterprise workflows.
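A common rule of thumb for dense transformer models is that a forward pass costs roughly 2 floating-point operations (FLOPs) per parameter per token. The sketch below applies that approximation to two illustrative sizes: about 66 million parameters (roughly the size of DistilBERT) and 7 billion (a common size for open-weight LLMs). The `forward_flops` helper is a hypothetical illustration, and it estimates raw compute only; real latency also depends on hardware, memory bandwidth, batching, and runtime optimizations.

```python
# Rule of thumb (an approximation, not a benchmark): a dense transformer's
# forward pass costs roughly 2 FLOPs per parameter per token processed.
FLOPS_PER_PARAM_PER_TOKEN = 2


def forward_flops(num_params: float, num_tokens: int) -> float:
    """Approximate forward-pass FLOPs for processing `num_tokens` tokens."""
    return FLOPS_PER_PARAM_PER_TOKEN * num_params * num_tokens


# Illustrative sizes: ~66M parameters (DistilBERT-sized) vs. a 7B-parameter LLM,
# both processing the same 500-token input.
small = forward_flops(66e6, 500)
large = forward_flops(7e9, 500)

print(f"SLM: {small:.2e} FLOPs, LLM: {large:.2e} FLOPs, ratio ~{large / small:.0f}x")
```

Under these assumptions the smaller model needs on the order of 100x less compute for the same input, which is the intuition behind the faster-inference and lower-energy claims.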

SLMs vs LLMs: A Practical Comparison for Enterprises

When choosing between Small Language Models and Large Language Models, enterprises need to weigh trade-offs beyond raw intelligence.

| Factor | Small Language Models (SLMs) | Large Language Models (LLMs) |
|---|---|---|
| Model Size | Compact models with fewer parameters | Very large models with billions of parameters |
| Infrastructure Needs | Can run on CPUs, edge devices, or lightweight cloud setups | Require high-end GPUs and cloud-scale infrastructure |
| Deployment Cost | Low training, deployment, and inference cost | High infrastructure and API usage cost |
| Inference Speed | Faster response times due to smaller size | Slower inference due to heavy computation |
| Energy Consumption | Energy-efficient and environmentally friendly | High power consumption |
| Data Privacy | Can be deployed fully on-premise with complete data control | Often rely on third-party APIs and external servers |
| Compliance & Security | Easier to align with enterprise compliance standards | Compliance depends on vendor and data policies |
| Best For | Enterprises needing cost-efficient, secure, and targeted AI | Applications requiring broad, general AI capabilities |
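The Model Size and Infrastructure Needs rows can be made concrete with simple arithmetic: the memory needed to hold a model's weights is roughly its parameter count times the bytes per parameter (4 for 32-bit floats, 2 for 16-bit floats, 1 for 8-bit quantized weights). The `weight_memory_gb` helper below is a hypothetical illustration, not part of any library, and it counts weights only; activations, caches, and runtime overhead add more on top.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Memory for the model weights alone, in gigabytes (1 GB = 1e9 bytes).

    Excludes activations, KV caches, and framework overhead, so real usage is higher.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


models = [("DistilBERT-sized (~66M)", 66e6), ("7B-parameter LLM", 7e9)]
for name, params in models:
    for precision in ("fp32", "fp16", "int8"):
        print(f"{name:24s} {precision}: {weight_memory_gb(params, precision):6.2f} GB")
```

With these numbers, a ~66M-parameter model needs about 0.26 GB even at full 32-bit precision, which fits easily in ordinary CPU memory, while a 7B-parameter model needs about 14 GB at 16-bit precision, which usually means a GPU with a large amount of memory.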

Examples of Small Language Models

Popular examples of small, compact language models include:

- DistilBERT

- ALBERT

- TinyBERT

- MiniLM

- Domain-specific transformer models

These models are often adapted for enterprise NLP applications such as customer service automation, compliance analysis, and internal knowledge search.

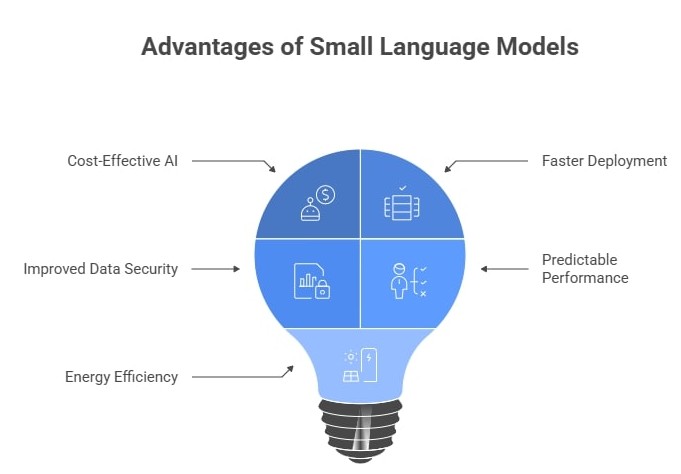

Benefits of Small Language Models

Small Language Models provide several benefits for enterprise AI adoption.

Cost-Effective AI

SLMs use fewer computational resources, resulting in significant savings in infrastructure and operational costs.

Faster Deployment

Their smaller size allows faster training, testing, and production rollout.

Improved Data Security

SLMs can be deployed on-premise or within private cloud environments, keeping enterprise data under the organization's control.

Predictable Performance

Because SLMs are task-focused, their outputs are more stable and easier to evaluate.
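Because a task-focused SLM produces outputs from a fixed set of labels, it can be scored against a labeled test set with standard metrics. The minimal sketch below computes accuracy plus per-label precision and recall. The ticket-routing labels and predictions are made-up examples; in practice `y_pred` would come from the model being evaluated.

```python
from collections import Counter


def evaluate(y_true: list[str], y_pred: list[str]) -> dict:
    """Accuracy plus per-label precision and recall for a single-label classifier."""
    if len(y_true) != len(y_pred):
        raise ValueError("need exactly one prediction per labeled example")
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    true_pos = Counter(t for t, p in zip(y_true, y_pred) if t == p)  # correct hits per label
    predicted = Counter(y_pred)  # how often each label was predicted
    actual = Counter(y_true)     # how often each label really occurs
    per_label = {}
    for label in sorted(set(y_true) | set(y_pred)):
        precision = true_pos[label] / predicted[label] if predicted[label] else 0.0
        recall = true_pos[label] / actual[label] if actual[label] else 0.0
        per_label[label] = {"precision": precision, "recall": recall}
    return {"accuracy": accuracy, "per_label": per_label}


# Hypothetical labels for a ticket-routing model.
y_true = ["billing", "billing", "technical", "account", "technical"]
y_pred = ["billing", "technical", "technical", "account", "technical"]
report = evaluate(y_true, y_pred)
print(report["accuracy"])
print(report["per_label"])
```

Here one billing ticket was misrouted to technical, so accuracy is 0.8, billing recall drops to 0.5, and technical precision drops to 2/3. A per-label breakdown like this shows exactly where a narrow model needs more training data.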

Energy Efficiency

Lower power consumption makes SLMs a more sustainable AI option.

Where SLMs Fall Short: Limitations You Should Know

Despite their advantages, Small Language Models are not a one-size-fits-all solution.

Key limitations include:

- Lower general knowledge compared to LLMs

- Reduced ability to handle complex, open-ended conversations

- Need for careful dataset curation

- Less suited to creative or exploratory tasks

Enterprises should deploy SLMs for well-defined tasks and set expectations accordingly.

Small Language Models Use Cases and Examples

SLMs are already delivering value across industries.

Customer Support Automation

Intent detection, ticket routing, and FAQ automation using private enterprise data.

Enterprise Search

Semantic search across internal documents, policies, and knowledge bases.
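Semantic search usually works by converting documents and queries into embedding vectors and ranking documents by how close their vectors are to the query, commonly measured with cosine similarity. The sketch below shows only that ranking step, using made-up three-dimensional vectors and hypothetical document IDs. In a real system the vectors would come from an embedding model (compact encoders such as MiniLM-based models are a common choice) and would have hundreds of dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 1.0 means they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as unrelated to everything
    return dot / (norm_a * norm_b)


def search(query_vec: list[float], doc_vecs: dict[str, list[float]],
           top_k: int = 2) -> list[tuple[str, float]]:
    """Return the top_k (document ID, score) pairs ranked by similarity to the query."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


# Hypothetical embeddings for three internal documents.
documents = {
    "travel-policy": [0.9, 0.1, 0.0],
    "expense-policy": [0.7, 0.6, 0.1],
    "security-guidelines": [0.0, 0.2, 0.95],
}
query = [0.8, 0.3, 0.0]  # stands in for an embedded question about business-trip rules

for doc_id, score in search(query, documents):
    print(f"{doc_id}: {score:.3f}")
```

This linear scan is fine for a handful of documents; for large knowledge bases, a dedicated vector index typically replaces it while keeping the same idea of nearest-neighbor ranking.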

Compliance and Risk Analysis

Text classification for regulatory documents and audit logs.

HR and Internal Tools

Resume screening, internal chatbots, and employee self-service systems.

Healthcare and Finance

Domain-specific text analysis where accuracy and data privacy are critical.

These use cases explain why SLMs are becoming central to enterprise AI strategies.

Future of SLMs in Enterprise AI

The future of Small Language Models depends heavily on enterprise needs. As organizations prioritize privacy, cost-efficiency, and control, SLM adoption is likely to keep growing.

Key trends include:

- Hybrid AI systems combining SLMs and LLMs

- Increased on-device and edge AI deployments

- Improved tools for monitoring and fine-tuning SLMs

- Industry-specific SLMs trained on proprietary datasets
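One common way to combine SLMs and LLMs in a hybrid system is confidence-based routing: the SLM handles the requests it is confident about, and everything else is escalated to an LLM. The sketch below assumes the SLM is a classifier that returns a label with a confidence score. `SlmResult`, `fake_slm`, `fake_llm`, and the 0.8 threshold are hypothetical placeholders, not a specific product's API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SlmResult:
    label: str
    confidence: float  # the model's probability for its top label, between 0.0 and 1.0


def route(query: str,
          slm: Callable[[str], SlmResult],
          llm: Callable[[str], str],
          threshold: float = 0.8) -> tuple[str, str]:
    """Answer with the SLM when it is confident enough; otherwise escalate to the LLM.

    Returns a (handled_by, answer) pair.
    """
    result = slm(query)
    if result.confidence >= threshold:
        return "slm", result.label
    return "llm", llm(query)


# Hypothetical stand-ins for real model calls.
def fake_slm(query: str) -> SlmResult:
    if "password" in query.lower():
        return SlmResult("reset_password_faq", 0.95)
    return SlmResult("unknown", 0.30)


def fake_llm(query: str) -> str:
    return f"[LLM response to: {query}]"


print(route("How do I reset my password?", fake_slm, fake_llm))
print(route("Draft a summary of our Q3 vendor risks", fake_slm, fake_llm))
```

The cheap, private SLM absorbs routine traffic, and only open-ended requests reach the larger model. In practice the threshold should be tuned on labeled data, because classifier confidence scores are not always well calibrated.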

SLMs are not replacing LLMs, but they are becoming a foundational component of enterprise AI.

Why Use Cleverbits for SLMs

Successfully implementing Small Language Models takes more than picking a model. Enterprises also need expertise in data preparation, deployment, and governance.

Cleverbits.ai builds enterprise-ready Small Language Models tailored to specific business needs. Their approach focuses on:

- Secure and private AI deployments

- Custom SLM development for domain-specific tasks

- On-premise and edge AI solutions

- Cost-optimized AI pipelines

- Compliance-first AI architecture

With Cleverbits.ai, enterprises can harness the power of SLMs without the complexity and risks associated with large-scale AI systems.

Frequently Asked Questions

1. Are SLMs cheaper than LLMs to run?

Generally, yes. SLMs require significantly less compute power, making them more affordable to deploy and maintain.

2. Do SLMs require fine-tuning?

Often yes. Fine-tuning allows SLMs to perform accurately for enterprise-specific tasks.

3. Can SLMs run on-premise or on edge devices?

Absolutely. One of the biggest advantages of SLMs is their ability to run on-premise or on low-resource devices.

4. How do SLMs handle private enterprise data?

SLMs can be deployed in private environments, which helps keep sensitive data secure and compliant.

5. Will SLMs replace LLMs in the future?

No. SLMs and LLMs will coexist, each serving different roles within enterprise AI ecosystems.

Conclusion

Small Language Models are reshaping enterprise AI adoption. By offering focused intelligence, lower costs, high performance, and strong data privacy, SLMs present a practical alternative to Large Language Models for business applications.

For organizations seeking scalable, secure, and cost-efficient AI, SLMs are not just an option but a strategic advantage. Partnering with the right expert, such as Cleverbits.ai, ensures AI solutions that deliver measurable business value without unnecessary overhead.